Blog Directory

Industry Insights

The UK’s New “Less Healthy” Food & Drink Ad Rules Just Changed Influencer Marketing

by

Traackr

Read blog post

Industry Insights

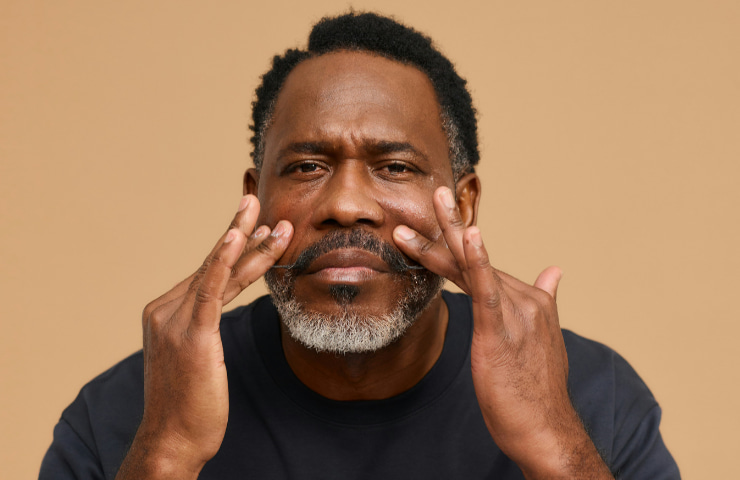

Rising and Top Influencers #Over40 in the Beauty Industry

by

Olivia Osborne

Read blog post

Marketing Strategy

Leveraging Data to Future-Proof Influencer Marketing

by

Antoinette Siu

Read blog post

Industry Insights

TikTok’s U.S. Algorithm Shift: How Brands Can Evolve Their Influencer Strategy

by

Holly Jackson

Read blog post

Product Innovation

Evaluate Creator Content Fit in Seconds with Traackr’s AI Content Summaries

by

Traackr

Read blog post

Marketing Strategy

Mastering YouTube Creator Marketing: Why YouTube Converts Confident Shoppers

by

Olivia Osborne

Read blog post

Marketing Strategy

From Vetting to Crisis Response: A Creator Risk Management Framework

by

Miakel D. Williams

Read blog post

Marketing Strategy

Precision Data Is the New Creator Marketing Superpower

by

Antoinette Siu

Read blog post

Success Stories

Beyond the Celebrity: How Rhode Skincare Built a Scalable Creator Ecosystem

by

Traackr

Read blog post

Marketing Strategy

How Leading Brands Are Scaling Creator Advocacy: Insights From IMPACT

by

Traackr

Read blog post

Marketing Strategy

Why Creator Pay Transparency Is a Smart Strategy for Brands

by

Traackr

Read blog post

Marketing Strategy

5 Strategies To Build Impactful Creator Partnerships: Insights From Top Influencers

by

Olivia Osborne

Read blog post

Marketing Strategy

The Enterprise Playbook for Creator Marketing at Scale

by

Pierre-Loic Assayag

Read blog post

Marketing Strategy

Top Influencer Marketing Strategy Tips From L’Oréal, NYX Cosmetics and Neutrogena

by

Read blog post

Success Stories

Top Black Owned and Founded Beauty Brands in Influencer Marketing

by

Traackr

Read blog post

Marketing Strategy

Preparing Your Influencer Strategy for the Potential TikTok Ban

by

Holly Jackson

Read blog post

Marketing Strategy

CMO Strategy: 5 Golden Marketing Opportunities to Take Your Brand to the Next Level

by

Pierre-Loic Assayag

Read blog post

Industry Insights

Rising and Top Influencers #Over40 in the Fashion Industry

by

Olivia Osborne

Read blog post

Marketing Strategy

Influencer Marketing Measurement: Why EMV Falls Short and How VIT Offers a Better Alternative

by

Traackr

Read blog post

Level up your creator marketing expertise

Get industry insights and updates straight to your inbox.

Subscribe